Guidelines for Reviewers

Overview

As an ALMA proposal reviewer, you play a key role in ensuring a fair, competitive, and transparent evaluation process. Your rankings and written reviews help select the most compelling proposals and provide valuable guidance to proposers and fellow reviewers.

Peer review is vital but challenging. It requires careful analysis, clear communication, and awareness of potential biases. To ensure fairness, evaluate each proposal based on its merits while minimizing any biases that could unintentionally influence your judgment.

To ensure your reviews are thorough and effective, follow these recommended steps:

Preparation

- Allocate sufficient time: Set aside enough time to thoroughly review the proposals. This includes reading each proposal, drafting your reviews, finalizing your rankings, and re-reading your reviews for clarity and accuracy. For distributed peer review, expect to spend 2-3 days reviewing a Proposal Set (i.e., 10 proposals).

- Mitigate unconscious bias: Unconscious bias can unintentionally influence your evaluations. Recognizing and minimizing this bias is important to ensure fairness. For more information, refer to the section on Unconscious Bias in the Review Process.

- Understand the review criteria: Familiarize yourself with the review criteria for evaluating proposals (see Review Criteria).

- Familiarize yourself with key policies: Read the Code of Conduct and Confidentiality, the policy on Generative Artificial Intelligence use, the Criteria for Conflicts of Interest, and the Role of Mentors for non-PhD Reviewers to ensure a fair and ethical review process.

Review proposals

- Read the proposals thoroughly: Review the entire proposal, including the abstract, scientific justification, and technical justification.

- Write constructive and clear reviews: Provide specific and actionable feedback. Highlight strengths and weaknesses clearly, offering suggestions for improvement where appropriate. See How to write a useful proposal review for more tips on writing informative reviews.

Rank proposals

- Rank proposals against the review criteria: Rank proposals according to the established criteria, ensuring that your rankings align with the balance of strengths and weaknesses in your review.

Learn from other reviewers

- Learn from other reviewers in Stage 2: In Stage 2, you can read the other reviewers' comments on your assigned proposals, which can provide valuable insights to help you refine your rankings and reviews. For details on the Stage 2 process, see the distributed peer review documentation.

The rest of this guide provides additional tips and outlines the relevant policies to help you conduct a thorough and insightful review.

Code of conduct and confidentiality

All participants in the review process are expected to behave in an ethical manner.

- Reviewers will judge proposals solely on their scientific merit.

- Reviewers will be mindful of bias in all contexts.

- Reviewers will declare all major conflicts of interest.

- The proposal reviews will be constructive and avoid any inappropriate language.

- Reviewers will not use generative Artificial Intelligence (GAI) to evaluate or rank proposals (see Generative Artificial Intelligence policy).

All proposal materials related to the review process are strictly confidential.

- The assigned proposals should not be distributed or used in any manner not directly related to the review process.

- Any data, intellectual property, and non-public information shown in the proposals may be used only for the purpose of carrying out the requested proposal review.

- The assigned proposals and the reviews may not be discussed with anyone other than the Proposal Handling Team or, when applicable, the assigned mentor.

- Following the GAI policy, reviewers and mentors will not input any part of the assigned proposals into GAI or machine learning tools.

- All electronic and paper copies of the proposal materials must be destroyed as soon as a reviewer completes the proposal review process.

If a reviewer fails to uphold ethical standards or does not complete their reviews in good faith, the proposal(s) for which they are the designated reviewer may be disqualified.

Unconscious bias in the review process

Bias in the review process occurs when a reviewer unknowingly favors or disfavors a proposal for reasons unrelated to its scientific merit. These biases are shaped by personal culture and experiences, making unconscious bias a challenge for all reviewers. Common examples include biases based on culture, age, prestige, language, gender, and institutional affiliation.

As an ALMA reviewer, recognizing and addressing unconscious bias is essential for ensuring a fair and objective review process. Cognitive biases, such as anchoring (overemphasizing initial impressions), confirmation bias (seeking evidence to confirm preexisting beliefs), and the halo effect (allowing a researcher's reputation to influence evaluations), can unintentionally undermine the fairness and quality of assessments.

To help reduce these influences, ALMA has adopted a dual-anonymous review system. You can also take the following steps to further mitigate bias:

- Allow sufficient time: Avoid rushing through your reviews. Allocate adequate time to evaluate each proposal carefully and critically reflect on your decisions to ensure your feedback is balanced and impartial.

- Use a structured approach: Apply the review criteria consistently across all proposals. Take notes and base your feedback on specific examples from the proposal.

- Actively reflect: Be mindful of how initial impressions or assumptions may influence your evaluation. If you have a strong opinion about a proposal, challenge yourself to consider alternative perspectives.

- Follow dual-anonymous guidelines: Avoid speculating on the identity or affiliation of the proposers.

By implementing these strategies, you can help ensure the integrity, fairness, and quality of the ALMA review process.

Review Criteria

Each proposal contains the following sections, which reviewers are required to read:

- Abstract,

- Scientific Justification,

- Technical Justification.

- Note: The Technical Justification typically provides a detailed justification of the requested sensitivity, angular resolution, and correlator setup, which is important for evaluating the proposal.

Criteria applicable to all proposals

- Scientific Merit

Assess the overall scientific merit of the proposed investigation and its potential contribution to the advancement of scientific knowledge based on the following questions:

- Does the proposal clearly indicate which important, outstanding questions will be addressed?

- Will the proposed observations have a high scientific impact on this particular field and address the specific science goals of the proposal?

Note: ALMA encourages reviewers to consider well-designed high-risk/high-impact proposals even if there is no guarantee of a positive outcome or detection.

- Does the proposal clearly describe how the data will be analyzed in order to achieve the science goals?

- Suitability of Observations

Evaluate the suitability of the observations to achieve the scientific goals considering the following questions:

- Is the choice of target (or targets) clearly described and well justified?

- Are the requested signal-to-noise ratio, angular resolution, largest angular scale, and spectral setup sufficient to achieve the science goals, and are they well justified?

- Does the proposal justify why new observations are needed to achieve the science goals?

- For Joint Proposals (see the Proposer’s Guide), does the proposal clearly describe why observations from multiple observatories are required to achieve the science goals?

Important: Base your assessment solely on the content of the proposal according to the above criteria. Proposals may contain references to published papers (including preprints) as per standard practice in scientific literature. Consultation of those references should not, however, be required for a general understanding of the proposal.

Additional criteria for Large Programs

For Large Programs, in addition to the review criteria listed above, reviewers should also consider the following factors:

- Scientific impact

- Does the Large Program address a strategic scientific issue and have the potential to lead to a major advance or breakthrough in the field that cannot be achieved by combining regular proposals?

- Value of data products

- Are the data products that will be delivered by the proposal team appropriate given the scope of the proposal?

- Will these products be valuable and beneficial to the broader scientific community?

- Publication plan

- Is the proposed publication plan appropriate for the scope and goals of the project?

- Team organization and resources

- Are the team's organization and available computing resources sufficient to complete the project in a timely fashion?

- Team expertise

The APRC will evaluate the team expertise statements for Large Programs to assess whether the proposal team is prepared to complete the project in a timely fashion.

The team expertise statements will be evaluated only after the APRC has completed the scientific rankings of the Large Programs. The evaluation of the team expertise statements will not be used to modify the scientific rankings.

Any concerns that the APRC has about the team expertise of a Large Program will be communicated to the ALMA Director, who will make the final decision on whether to accept the proposal.

- Technical and scheduling feasibility

The JAO will assess the technical and scheduling feasibility of the Large Programs and report the results to the APRC.

Notes on review criteria

- Technical Feasibility

- The ALMA Observing Tool (OT) validates most technical aspects of the proposal; e.g., the OT verifies that the angular resolution can be achieved, verifies that the correlator setup is feasible, and provides an accurate estimate of the integration time needed to achieve the requested sensitivity. Reviewers should assume that the OT technical validation of the proposal is correct.

- Reviewers should consider whether the signal-to-noise ratio, angular resolution, largest angular scale, and spectral setup requested by the PI are sufficient to achieve the scientific goals of the proposal and are well justified.

- Reviewers should not downgrade a proposal based solely on the requested observation time.

- Scheduling Feasibility

- Reviewers should not consider the scheduling feasibility in their rankings. The JAO will assess this separately when building the observing queue and forward this information to the PI when needed.

- Dual-anonymous

- Please refer to the dual-anonymous guidelines for reviewers for guidance on how to approach the proposal review under the dual-anonymous policy.

- Resubmissions and Joint Proposals

- Resubmissions: Reviewers should evaluate a resubmission of a previously accepted proposal as they would any other proposal according to the review criteria. If the proposal is accepted, any science goals which have already been observed will be descoped.

- Joint Proposals: Joint proposals should be evaluated like any other proposal, following the review criteria stated above.

Technical concerns and reviewer support

If reviewers have a technical question about a proposal they are assessing, they can contact the Proposal Handling Team (PHT) through the ALMA Helpdesk by opening a ticket in the "Proposal Review Support" department. Additionally:

- Reviewers in the distributed peer review process may also note any technical concerns of a proposal in their comments to the JAO in the Reviewer Tool.

- Members of the ALMA Proposal Review Committee (APRC) may also do so by adding a comment to the PHT in the ARP Meeting Tool during the panel meeting.

Role of the Mentor for non-PhD reviewers

Reviewers participating in the distributed review process who do not have a PhD are required to have a mentor who will assist with the proposal review. The mentor is specified in the OT when preparing the proposal; they are not required to be a member of the proposal team. In general, the mentor provides guidance to the student throughout the review process. They have access to the proposals and the reviews of their mentees through the Reviewer Tool in read-only mode.

Specific roles of a mentor include:

- Work with the reviewer to declare any conflicts of interest on the assigned proposals. The conflicts of interest criteria apply to both the reviewer and the mentor.

- Provide advice to the reviewer as needed on the scientific assessment of the proposals.

- Provide guidance to the reviewer on writing constructive feedback to the PIs.

- Review the comments to the PI before they are submitted.

Criteria for conflicts of interest

The goal of the review assignments is to provide informed and unbiased assessments of the proposals. A major conflict of interest occurs when a reviewer’s personal or work interests would benefit if the proposal under review is accepted or rejected.

To allow better identification of conflicts of interest, a reviewer has the option to provide a list of investigators for whom they have a major conflict of interest. If this list is provided, reviewers will not be assigned a proposal in which a PI, co-PI, or co-I is in their list.

How to set up the list of conflicts of interest

- Reviewers can set their conflicts-of-interest list in their user preferences on the ALMA Science Portal by searching for names in the ALMA user database.

- Only registered ALMA users need to be included in the list because all proposers, and therefore all reviewers, must be registered.

Who to include in the conflicts-of-interest list

- Close collaborators. Defined as a substantial collaboration on three or more papers within the past three years or an active, substantial collaboration on a current project.

Note: Membership in a large project team does not, on its own, constitute a conflict of interest.

- Students and postdocs under supervision of the reviewer within the past three years.

- A reviewer’s supervisor (for student and postdoc reviewers).

- Close personal ties (e.g., family member, partner) who are ALMA users.

- Other reasons. Any other circumstances where the reviewer believes a major conflict exists.

Identification of conflicts during proposal assignment

Before assigning proposals, the PHT will identify major conflicts of interest based on the following criteria:

- The PI, reviewer, or mentor of the submitted proposal is a PI or co-I of the proposal to be reviewed.

- The PI, one of the co-PIs, or one of the co-Is of the proposal to be reviewed is in the conflicts-of-interest list provided by the reviewer or mentor of the submitted proposal.

- If the reviewer or mentor does not provide such a list, a conflict of interest will be identified when the reviewer or mentor of the submitted proposal and the PI of the proposal to be reviewed have appeared together, with one as PI or co-PI and the other as co-I, on three or more proposals in the past three cycles (including DDT cycles and supplemental calls).
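The automatic checks above amount to a few rules applied to investigator lists. Purely as an illustration, the following Python sketch expresses them; it is not the PHT's actual implementation. The `Proposal` class and the function names are hypothetical, co-PIs are assumed to count as PIs for the first criterion, and the `history` argument is assumed to be already restricted to proposals from the past three cycles (including DDT cycles and supplemental calls).

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Proposal:
    """Hypothetical minimal model of a proposal's investigator list."""
    pi: str
    co_pis: set[str] = field(default_factory=set)
    co_is: set[str] = field(default_factory=set)

    def leads(self) -> set[str]:
        # PI plus co-PIs (the "PI/co-PI" side of a pairing).
        return {self.pi} | self.co_pis

    def investigators(self) -> set[str]:
        # Everyone listed on the proposal.
        return self.leads() | self.co_is


def paired_count(a: str, b: str, history: list[Proposal]) -> int:
    """Count proposals where one person is PI/co-PI and the other is a co-I."""
    return sum(
        1 for p in history
        if (a in p.leads() and b in p.co_is) or (b in p.leads() and a in p.co_is)
    )


def has_major_conflict(
    submitting_pi: str,
    checked: dict[str, Optional[set[str]]],  # reviewer/mentor -> COI list, or None if not provided
    target: Proposal,
    history: list[Proposal],  # assumed pre-filtered to the past three cycles
) -> bool:
    # Criterion 1: the PI, reviewer, or mentor of the submitted proposal
    # is an investigator on the proposal to be reviewed.
    if ({submitting_pi} | set(checked)) & target.investigators():
        return True
    for person, coi_list in checked.items():
        if coi_list is not None:
            # Criterion 2: a target investigator is on this person's COI list.
            if coi_list & target.investigators():
                return True
        elif paired_count(person, target.pi, history) >= 3:
            # Criterion 3 (only when no list was provided): three or more
            # PI/co-PI vs. co-I pairings with the target PI.
            return True
    return False


history = [Proposal(pi="Rivera", co_is={"Chen"}) for _ in range(3)]
target = Proposal(pi="Chen", co_is={"Okafor"})
print(has_major_conflict("Lee", {"Rivera": None}, target, history))   # True (criterion 3)
print(has_major_conflict("Lee", {"Rivera": set()}, target, history))  # False (list provided, no match)
```

The second call shows the design point in the text: once a reviewer or mentor provides a conflicts-of-interest list, the list is used instead of the co-authorship fallback.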

Declaring additional conflicts of interest

When reviewers receive their proposal assignments, they may identify additional conflicts of interest that were not identified by the above checks. Potential conflicts of interest at this stage include:

- The reviewer is proposing to observe the same object(s) with similar science objectives as the proposal under review.

- The reviewer has provided significant advice to the proposal team on the proposal even though they are not listed as an investigator.

- Any other reason for which the reviewer believes there is a strong conflict of interest.

Reviewers should inform the PHT of any major conflict of interest in their assignments by rejecting the proposal assignment and indicating why they believe a major conflict of interest exists. This is done through the Reviewer Tool (for distributed peer review) or the Assessor Tool (for the APRC). The PHT will evaluate the reported conflict(s). In distributed peer review, if the conflict of interest is approved, a new proposal will be assigned to the reviewer.

Student reviewers participating in distributed peer review must declare any additional conflict that applies to either themselves or their mentor. Because mentors have read-only access to their mentees' proposal sets, any conflicts of interest they have with the proposals assigned to their mentees must be declared by the mentees. Student reviewers should work with their mentor to ensure that conflicts of interest are identified accurately.

What is not a conflict of interest

- Suspecting the identity of the proposal team

- Lack of expertise in a given scientific category or keyword.

Policy on Use of Generative Artificial Intelligence

ALMA has adopted a policy on the acceptable use of Generative Artificial Intelligence (GAI) in preparing and reviewing ALMA proposals. The policy balances the benefits of GAI with the need to preserve human judgment, scientific expertise, and confidentiality. Reviewers are strongly encouraged to read the full policy available in Appendix C of the ALMA User’s Policies. Below is a summary of the key points relevant to proposal reviewers and mentors:

- GAI tools must not be used to recommend ranks, evaluate proposal strengths and weaknesses, or perform any other evaluation task.

- Reviewers and mentors are strictly prohibited from entering any content from assigned proposals into GAI or machine learning tools, as this breaches the confidentiality of the review process.

- Reviewers may only use GAI tools to correct grammar or improve readability of the reviews. If GAI tools are used, reviewers need to consider that:

- Sensitive information related to proposals must be removed before using GAI tools. No proposal-specific or confidential information may be input into these tools.

- Reviewers are fully responsible for ensuring that any output generated by GAI tools is accurate and complies with ALMA’s guidelines.

Reviewers and mentors must adhere to this policy. Failure to comply may result in the disqualification of the reviewer’s own proposal.

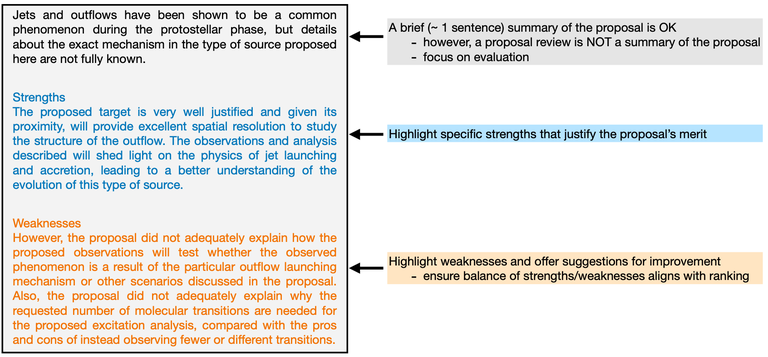

How to write a useful proposal review

Clear and constructive reviews are crucial to maintaining a fair and transparent evaluation process. They help proposers refine future submissions and provide valuable input to other reviewers in the Stage 2 process. All reviews should be written in English.

Guidelines for writing effective reviews

- Highlight strengths and weaknesses

- A review should not simply summarize the proposal. While a brief overview is fine, the majority of the review should focus on the strengths and weaknesses.

- Clearly outline both strengths and weaknesses. This allows the PI to understand what aspects of the project are strong, and where improvements are needed for future proposals.

- Focus on major strengths and major weaknesses. Avoid giving the impression that a minor weakness led to a poor ranking. Many proposals do not have significant weaknesses but are less compelling than others. In such a case, acknowledge that the proposal is good, but note that others were more compelling.

- Ensure that the balance of strengths and weaknesses aligns with your ranking of the proposal.

- Be objective and specific

- Offer specific, constructive feedback on how the proposal could be improved.

- When relevant, support your statements with references or evidence.

- Avoid vague statements that could apply to any proposal.

- Be concise.

- The quality of a review is more important than its length. A good review is both concise and informative. In practice, a typical review is about 700-800 characters (6–8 sentences).

- Avoid overly brief reviews (less than a few hundred characters), as they often lack the depth needed to be useful.

- Be professional and constructive.

- Avoid inflammatory or inappropriate language, even if you think the proposal could be significantly improved.

- Do not use sarcasm or any insulting language.

- Be aware of unconscious bias.

- We all have biases, and we need to make special efforts to review the proposals objectively. We encourage reviewers to be mindful of unconscious bias during the review process. For more information, see Unconscious bias in the review process.

- Other best practices.

- Use complete sentences when writing your reviews. We understand that many reviewers are not native English speakers, but aim for correct grammar, spelling, and punctuation.

- Do not include comments about scheduling feasibility. ALMA will assess the scheduling feasibility when building the observing queue and forward this information to the PI when needed.

- Do not include explicit references to other proposals that you are reviewing, such as project codes.

- Do not ask questions in your review. A question is usually an indirect way to indicate there is a weakness in the proposal, but the weakness should be stated directly.

- Critique the proposal, not the proposal team. For example, instead of writing “The PI did not ...”, write “The proposal did not ...”.

- Re-read and reflect on your reviews and scientific rankings.

- After completing your assessments, re-read your reviews and imagine how you would feel if you were the recipient. If the reviews seem overly harsh or unclear, revise them to ensure they are constructive and professional.

- Check if the strengths and weaknesses in your review align with the scientific ranking. If there is inconsistency, revise the reviews or the rankings.

Example review

Here is an example review that conforms with the above guidelines.

Common pitfalls

Below is a list of common pitfalls that reviewers should avoid when writing reviews, along with comments or examples of how to rephrase feedback to align with ALMA’s review-writing guidelines.

| Common pitfall | Don’t | Do |
|---|---|---|
| Asking questions instead of identifying weaknesses. | “Is the signal-to-noise sufficient for the science?” | State the weakness directly: “The proposal could have better demonstrated that a signal-to-noise ratio of 5 is sufficient to measure the spectral index accurately and discern between the two models.” |
| Criticizing without suggesting improvements. | “The observing strategy is poor.” | Explain why the observing strategy is poor and how it could be improved: “Since this is a detection experiment, it would be better to request coarse angular resolution (> 1 arcsec) to maximize the signal-to-noise ratio and avoid resolving the source.” |
| Writing incomplete sentences. | “Nice proposal.” | Write complete sentences and explain why this is a nice proposal: “This proposal will help better understand the properties of …” |
| Using unprofessional or sarcastic language. | “This proposal has little value.” | Use professional, constructive language and explain the specific shortcomings of the proposal. |
| Overemphasizing minor issues or non-essential aspects. | “The proposal has typos.” | While it is reasonable to mention an excessive number of typos as an additional comment to the PI, the listed weaknesses should address only the scientific merit of the proposal. |
| Critiquing the PI and not the proposal. | “The PI could have better justified ...” | Focus on critiquing the proposal itself, not the PI: “The proposal could have better justified ...” |
